MPLS with common services

1. Intro

We did some initial MPLS explorations in our first MPLS lab. However, that was just to see how MPLS works. Now we are actually going to do something with MPLS, like connecting systems to it and routing client traffic.

To do this, a simpler MPLS network is used. The focus is now on the routing through the MPLS network rather than on MPLS itself.

We'll use the lab provided by Packetlife. Downloading the lab proved not to be a good idea: my environment differs so much from Packetlife's that a whole set of new error messages popped up. I will also use a different set of IP addresses.


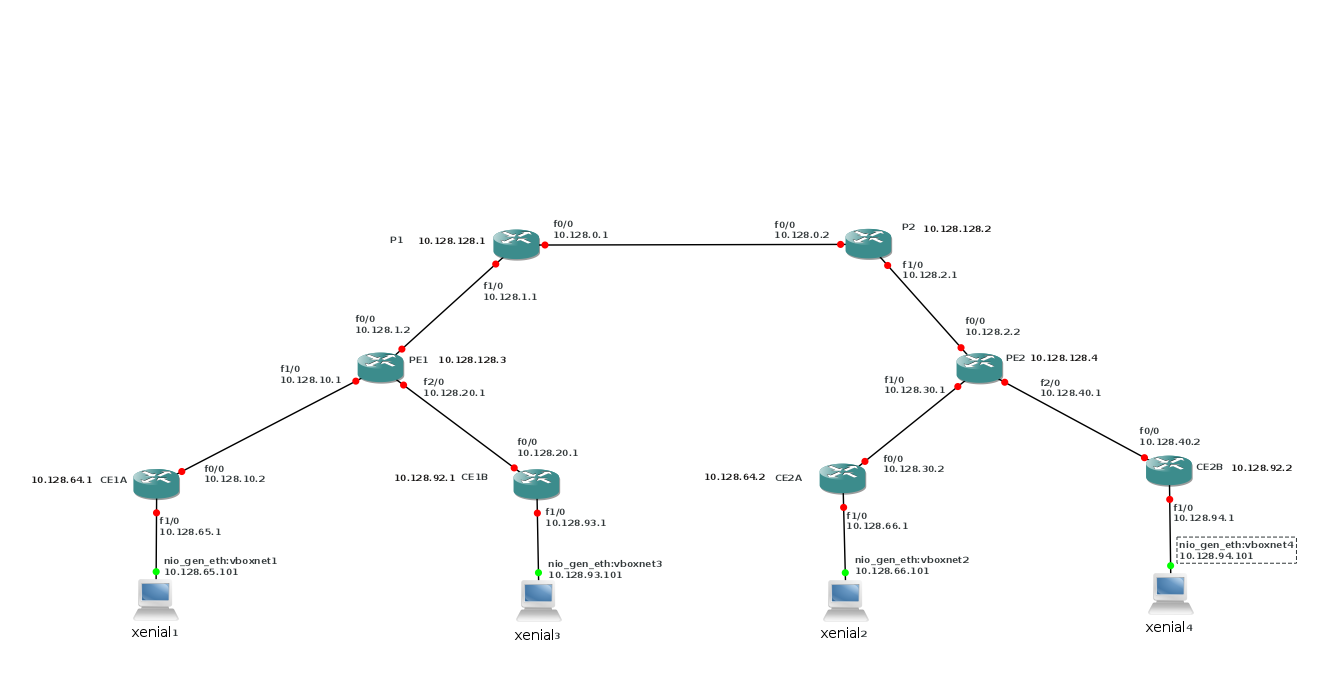

2. The network

As said, our network differs a bit from Packetlife's. Being network people, Packetlife likes to use loopback interfaces, but we have complete virtual machines, so why settle for less?

2.1. Two customers MPLS network

As IOS image I used c3660-jk9o3s-mz.124-17.bin, and I put Fast Ethernet cards in all slots.

The Vagrantfile is:

    # -*- mode: ruby -*-
    # vi: set ft=ruby :
    # Vagrantfile API/syntax version. Don't touch unless you know what you're doing!
    # Vagrant.configure("2") do |config|
    #   config.vm.box = "minimal/xenial64"
    # end
    #
    VAGRANTFILE_API_VERSION = "2"
    Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|
      config.vm.define :xenial1 do |t|
        t.vm.box = "ubuntu/xenial64"
        # t.vm.box_url = "file://links/virt_comp/vagrant/boxes/xenial64.box"
        t.vm.provider "virtualbox" do |prov|
          prov.customize ["modifyvm", :id, "--nic2", "hostonly", "--hostonlyadapter2", "vboxnet1" ]
        end
        t.vm.provision "shell", path: "./setup.xenial1.sh"
      end
      config.vm.define :xenial2 do |t|
        t.vm.box = "ubuntu/xenial64"
        #t.vm.box_url = "file://links/virt_comp/vagrant/boxes/xenial64.box"
        t.vm.provider "virtualbox" do |prov|
          prov.customize ["modifyvm", :id, "--nic2", "hostonly", "--hostonlyadapter2", "vboxnet2" ]
        end
        t.vm.provision "shell", path: "./setup.xenial2.sh"
      end
      config.vm.define :xenial3 do |t|
        t.vm.box = "ubuntu/xenial64"
        #t.vm.box_url = "file://links/virt_comp/vagrant/boxes/xenial64.box"
        t.vm.provider "virtualbox" do |prov|
          prov.customize ["modifyvm", :id, "--nic2", "hostonly", "--hostonlyadapter2", "vboxnet3" ]
        end
        t.vm.provision "shell", path: "./setup.xenial3.sh"
      end
      config.vm.define :xenial4 do |t|
        t.vm.box = "ubuntu/xenial64"
        #t.vm.box_url = "file://links/virt_comp/vagrant/boxes/xenial64.box"
        t.vm.provider "virtualbox" do |prov|
          prov.customize ["modifyvm", :id, "--nic2", "hostonly", "--hostonlyadapter2", "vboxnet4" ]
        end
        t.vm.provision "shell", path: "./setup.xenial4.sh"
      end
      config.vm.define :xenial5 do |t|
        t.vm.box = "ubuntu/xenial64"
        #t.vm.box_url = "file://links/virt_comp/vagrant/boxes/xenial64.box"
        t.vm.provider "virtualbox" do |prov|
          prov.customize ["modifyvm", :id, "--nic2", "hostonly", "--hostonlyadapter2", "vboxnet5" ]
        end
        t.vm.provision "shell", path: "./setup.xenial5.sh"
      end
    end

On our P and PE routers, we've enabled MPLS. LDP is enabled by default. It runs between the loopback interfaces of the routers. The loopback interfaces can reach each other because we run OSPF on the MPLS routers. You can see what OSPF does:

    P1#sh ip OSPF DATABASE
                OSPF Router with ID (10.128.128.1) (Process ID 1)
                    Router Link States (Area 0)
    Link ID         ADV Router      Age   Seq#        Checksum  Link count
    10.128.128.1    10.128.128.1    20    0x80000003  0x000BAE  3
    10.128.128.2    10.128.128.2    20    0x80000003  0x004D66  3
    10.128.128.3    10.128.128.3    23    0x80000002  0x00F1E9  2
    10.128.128.4    10.128.128.4    24    0x80000002  0x000EC8  2
                    Net Link States (Area 0)
    Link ID         ADV Router      Age   Seq#        Checksum
    10.128.0.2      10.128.128.2    25    0x80000001  0x000186
    10.128.1.2      10.128.128.3    23    0x80000001  0x00F98A
    10.128.2.2      10.128.128.4    25    0x80000001  0x00017F

and on the wire between P1 and P2, you'll see the OSPF packets:

You will also notice that the CE-routers are not in the OSPF database.

2.2. Routing on the PE

We defined VRFs on the PE routers for each customer:

    ip vrf Customer_A
     rd 65000:1
     route-target export 65000:1
     route-target import 65000:1
     route-target import 65000:99
    !
    ip vrf Customer_B
     rd 65000:2
     route-target export 65000:2
     route-target import 65000:2
     route-target import 65000:99

For each customer, we used the same route distinguisher (RD) on both PE routers. A common best practice is to give each VRF its own RD on every PE router. RDs are used to make routes globally unique. We can use the same RD on both PE routers because we are sure that the routes that are advertised are already unique per customer (A and B). If the network behind the CE routers is more dynamic, do not use the same RDs.

Route targets and route distinguishers together define the separate customers. With the route targets we can define which VRF sees the routes of which other VRF. We used the format AS:customer; customer 99 is for later use.
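The role of the RD can be illustrated with a small Python sketch. It is only a model, not how IOS stores its tables: the `Vpnv4Route` type and the `vpnv4_table` helper are names made up for this example, and the overlapping prefix 10.0.0.0/8 is a hypothetical case that does not occur in our lab.

```python
from typing import NamedTuple


class Vpnv4Route(NamedTuple):
    """A VPNv4 route is identified by its RD plus the customer prefix."""
    rd: str
    prefix: str


def vpnv4_table(advertisements):
    """Build a table keyed on (RD, prefix); equal keys overwrite each other."""
    table = {}
    for rd, prefix, vrf in advertisements:
        table[Vpnv4Route(rd, prefix)] = vrf
    return table


# Hypothetical: both customers advertise the same prefix, each with its own RD.
distinct_rds = vpnv4_table([
    ("65000:1", "10.0.0.0/8", "Customer_A"),
    ("65000:2", "10.0.0.0/8", "Customer_B"),
])

# Hypothetical: the same prefix advertised twice under one RD.
shared_rd = vpnv4_table([
    ("65000:1", "10.0.0.0/8", "Customer_A"),
    ("65000:1", "10.0.0.0/8", "Customer_B"),
])

print(len(distinct_rds), "routes with distinct RDs")
print(len(shared_rd), "route with a shared RD")
```

With distinct RDs both routes survive next to each other; with a shared RD the second advertisement is indistinguishable from the first, which is the situation the RD is meant to prevent.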

The VRFs are defined on the interfaces that connect the CE-routers:

    !
    interface FastEthernet1/0
     ip vrf forwarding Customer_A
     ip address 10.128.10.1 255.255.255.0
     ip ospf 2 area 0
     duplex auto
     speed auto
    !
    interface FastEthernet2/0
     ip vrf forwarding Customer_B
     ip address 10.128.20.1 255.255.255.0
     ip ospf 3 area 0
     duplex auto
     speed auto
    !

And you can see that it works:

    PE1#sh ip vrf interfaces
    Interface    IP-Address      VRF           Protocol
    Fa1/0        10.128.10.1     Customer_A    up
    Fa2/0        10.128.20.1     Customer_B    up

The next step is the routing of client traffic.

    router bgp 65000
     bgp log-neighbor-changes
     neighbor 10.128.128.4 remote-as 65000
     neighbor 10.128.128.4 update-source Loopback0
     neighbor 10.128.128.5 remote-as 65000
     neighbor 10.128.128.5 update-source Loopback0
     no auto-summary
     !
     address-family vpnv4
      neighbor 10.128.128.4 activate
      neighbor 10.128.128.4 send-community extended
      neighbor 10.128.128.5 activate
      neighbor 10.128.128.5 send-community extended
     exit-address-family
     !
     address-family ipv4 vrf Customer_B
      redistribute ospf 3 vrf Customer_B
      no synchronization
     exit-address-family
     !
     address-family ipv4 vrf Customer_A
      redistribute ospf 2 vrf Customer_A
      no synchronization
     exit-address-family
    !

So what does that mean?

    router bgp 65000
     neighbor 10.128.128.4 remote-as 65000

adds the other PE router as a BGP neighbor. Our autonomous system (AS) is 65000, which lies in the private AS range (64512-65534) and is therefore often used for private networks. Because the neighbor is in the same AS, this is an iBGP session.

     neighbor 10.128.128.4 update-source Loopback0

makes the loopback interface the source of the TCP connection for the BGP session.

     address-family vpnv4
      neighbor 10.128.128.4 activate
      neighbor 10.128.128.4 send-community extended
      neighbor 10.128.128.5 activate
      neighbor 10.128.128.5 send-community extended
     exit-address-family

This defines the VPNs: the VPNv4 address family is activated towards the other PE routers, and the extended communities carry the route targets. The RDs make the routes unique, even if customers use the same address space (e.g. 10.0.0.0/8). We've seen the RDs in the VRF definition above. The router 10.128.128.5 is for later use.

     address-family ipv4 vrf Customer_B
      redistribute ospf 3 vrf Customer_B
      no synchronization
     exit-address-family
     address-family ipv4 vrf Customer_A
      redistribute ospf 2 vrf Customer_A
      no synchronization
     exit-address-family

finally redistributes the routes learned through OSPF from the CE routers into BGP, separately for each VRF.

It is important to see that the complete separation of customers is handled by the provider.

This has some security implications. If you are a provider, you will need to be in complete

control of the separation to fulfill your contractual obligations. As a client, you will want

to make sure that your provider takes care of that separation. This can be done through contracts,

if you trust your provider enough, or through read rights on the PE-routers. And that could

be problematic. A solution would be to have a right to audit, as is often provided by the

contracts. You might also do some security monitoring here; IDS could be a valid requirement.

2.3. The resulting network

Now we can ping between xenial1 and xenial2 and between xenial3 and xenial4, but not from xenial1 to xenial3.

As an example:

    ljm[MPLS_common_services]$ vagrant ssh xenial1 -c 'ping -c2 10.128.66.101'
    PING 10.128.66.101 (10.128.66.101) 56(84) bytes of data.
    64 bytes from 10.128.66.101: icmp_req=1 ttl=58 time=142 ms
    64 bytes from 10.128.66.101: icmp_req=2 ttl=58 time=118 ms
    --- 10.128.66.101 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1001ms
    rtt min/avg/max/mdev = 118.239/130.381/142.524/12.147 ms
    Connection to 127.0.0.1 closed.
    ljm[MPLS_common_services]$ vagrant ssh xenial1 -c 'ping -c2 10.128.93.101'
    PING 10.128.93.101 (10.128.93.101) 56(84) bytes of data.
    From 10.128.65.1 icmp_seq=1 Destination Host Unreachable
    From 10.128.65.1 icmp_seq=2 Destination Host Unreachable
    --- 10.128.93.101 ping statistics ---
    2 packets transmitted, 0 received, +2 errors, 100% packet loss, time 1001ms
    Connection to 127.0.0.1 closed.
    ljm[MPLS_common_services]$ vagrant ssh xenial1 -c 'traceroute 10.128.66.101'
    traceroute to 10.128.66.101 (10.128.66.101), 30 hops max, 60 byte packets
     1  10.128.65.1 (10.128.65.1)  14.152 ms  14.034 ms  13.952 ms
     2  10.128.10.1 (10.128.10.1)  34.125 ms  34.014 ms  33.926 ms
     3  10.128.1.1 (10.128.1.1)  115.482 ms  115.421 ms  115.344 ms
     4  10.128.0.2 (10.128.0.2)  115.265 ms  115.189 ms  115.111 ms
     5  10.128.30.1 (10.128.30.1)  94.729 ms  94.675 ms  94.598 ms
     6  10.128.30.2 (10.128.30.2)  114.787 ms  103.218 ms  103.113 ms
     7  10.128.66.101 (10.128.66.101)  123.240 ms  117.791 ms  117.928 ms
    Connection to 127.0.0.1 closed.

What we see in the traceroute is that all the MPLS routers are visible. They can be hidden with

    no mpls ip propagate-ttl

See https://www.cisco.com/c/en/us/support/docs/multiprotocol-label-switching-mpls/mpls/26585-mpls-traceroute.html for details on the traceroute.
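To get a feeling for what hiding the MPLS routers means, here is a rough Python sketch of a traceroute over the path from the output above. It is a deliberately simplified model and not a description of what IOS really does: it assumes that, with TTL propagation switched off, the P routers never touch the IP TTL of the probe, and it ignores details such as penultimate hop popping. The role labels in `PATH` are my reading of the lab topology.

```python
# Routers on the way from xenial1 to xenial2, in the order of the traceroute.
PATH = [
    ("CE1A", "ce"), ("PE1", "pe"), ("P1", "p"),
    ("P2", "p"), ("PE2", "pe"), ("CE2A", "ce"),
]
DESTINATION = "xenial2"


def traceroute(path, destination, propagate_ttl=True, max_hops=30):
    """Return the names a traceroute would list under the simplified model."""
    hops = []
    for ttl in range(1, max_hops + 1):
        remaining = ttl
        answered_by = destination
        for name, role in path:
            # Assumption: without propagation, P routers only decrement
            # the label TTL, so the probe's IP TTL cannot expire there.
            if role == "p" and not propagate_ttl:
                continue
            remaining -= 1
            if remaining == 0:
                answered_by = name
                break
        hops.append(answered_by)
        if answered_by == destination:
            break
    return hops


print(traceroute(PATH, DESTINATION, propagate_ttl=True))
print(traceroute(PATH, DESTINATION, propagate_ttl=False))
```

In this model the first call lists seven hops, like the real traceroute above, and the second call lists the same path without P1 and P2.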

3. Common Service

A provider may want to offer a common service to all its clients. This may vary from Internet access to a website where they can find their billing information. The common service could also be used to give partners or customers access to your own network.

We've seen that the provider defines the separation of customers on the PE routers.

The provider can also provide a common services network. If such a service is provided,

the provider must be sure that there are no conflicts between IP addresses. Also, there may

be other requirements on the common service; it should not be possible to use the common

service as a stepping stone to other clients.

3.1. Our common service network

Our common service is a simple web server. On my xenial5 machine, I have a webserver

running.

We'll connect xenial5 directly to the PE router; no CE router is used.

3.2. Routing

On PE3, a single VRF is created.

    ip vrf services
     rd 65000:99
     route-target both 65000:99
     route-target import 65000:1
     route-target import 65000:2

We only import the routes from 65000:1 and 65000:2 but we do not export them.

All PE routers need to be neighbors (here for PE3):

    !
    router bgp 65000
     no synchronization
     bgp log-neighbor-changes
     neighbor 10.128.128.3 remote-as 65000
     neighbor 10.128.128.3 update-source Loopback0
     neighbor 10.128.128.4 remote-as 65000
     neighbor 10.128.128.4 update-source Loopback0
     no auto-summary
     !
     address-family vpnv4
      neighbor 10.128.128.3 activate
      neighbor 10.128.128.3 send-community extended
      neighbor 10.128.128.4 activate
      neighbor 10.128.128.4 send-community extended
     exit-address-family
     !
     address-family ipv4 vrf services
      redistribute connected
     exit-address-family
    !
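Since every PE router needs every other PE router as a neighbor, the neighbor statements are repetitive and easy to generate. The Python sketch below does that for the three loopback addresses from the router configs in the appendix. The helper names `bgp_neighbor_lines` and `session_count` are made up for this example, and the output is only the neighbor part of the BGP configuration, not a complete config.

```python
# Loopback0 addresses of the PE routers, taken from the configs in the appendix.
PE_LOOPBACKS = {
    "PE1": "10.128.128.3",
    "PE2": "10.128.128.4",
    "PE3": "10.128.128.5",
}
AS_NUMBER = 65000


def bgp_neighbor_lines(router, loopbacks=PE_LOOPBACKS, asn=AS_NUMBER):
    """Generate the iBGP neighbor statements for one PE router."""
    peers = [ip for name, ip in sorted(loopbacks.items()) if name != router]
    lines = [f"router bgp {asn}"]
    for ip in peers:
        lines.append(f" neighbor {ip} remote-as {asn}")
        lines.append(f" neighbor {ip} update-source Loopback0")
    lines.append(" address-family vpnv4")
    for ip in peers:
        lines.append(f"  neighbor {ip} activate")
        lines.append(f"  neighbor {ip} send-community extended")
    lines.append(" exit-address-family")
    return lines


def session_count(routers):
    """Number of sessions in a full mesh of `routers` routers."""
    return routers * (routers - 1) // 2


print("\n".join(bgp_neighbor_lines("PE3")))
print(session_count(len(PE_LOOPBACKS)), "sessions in total")
```

For three PE routers the full mesh is still small; the number of sessions grows quadratically with the number of PE routers.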

The following table might make it more clear what we're doing:

| router | vrf | RT 65000:1 | RT 65000:2 | RT 65000:99 |
|---|---|---|---|---|
| PE1 | cust_a | both | - | import |
| PE1 | cust_b | - | both | import |
| PE2 | cust_a | both | - | import |
| PE2 | cust_b | - | both | import |
| PE3 | serv. | import | import | both |

The PE3 therefore does not export the routes for customer A and B.

It is important to realize that all this is done on the PE routers and therefore under

the control of the MPLS provider. The customers A and B have no control over the routing

through the MPLS cloud.
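The import and export rules of the VRFs can be checked with a few lines of Python. This is only a sketch of the route-target logic, using the values from the VRF definitions shown earlier; the function names `imports_from` and `can_talk` are invented for the example and the model ignores everything else a router does.

```python
# Route-targets per VRF, copied from the VRF definitions on the PE routers.
VRFS = {
    "Customer_A": {"export": {"65000:1"}, "import": {"65000:1", "65000:99"}},
    "Customer_B": {"export": {"65000:2"}, "import": {"65000:2", "65000:99"}},
    "services": {"export": {"65000:99"},
                 "import": {"65000:99", "65000:1", "65000:2"}},
}


def imports_from(receiver, sender, vrfs=VRFS):
    """True if `receiver` imports at least one route-target `sender` exports."""
    return bool(vrfs[receiver]["import"] & vrfs[sender]["export"])


def can_talk(a, b, vrfs=VRFS):
    """Two-way traffic needs routes in both directions."""
    return imports_from(a, b, vrfs) and imports_from(b, a, vrfs)


for a, b in [("Customer_A", "services"),
             ("Customer_B", "services"),
             ("Customer_A", "Customer_B")]:
    print(f"{a} <-> {b}: {can_talk(a, b)}")
```

Both customers share routes with the services VRF, but not with each other, which is what the table expresses.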

3.3. The result

The result is that both A and B can see the common service, but they cannot see each other. See, for example, xenial1:

    ljm$ vagrant ssh xenial1 -c 'ping -c2 10.128.192.101'
    PING 10.128.192.101 (10.128.192.101) 56(84) bytes of data.
    64 bytes from 10.128.192.101: icmp_req=1 ttl=60 time=103 ms
    64 bytes from 10.128.192.101: icmp_req=2 ttl=60 time=80.1 ms
    --- 10.128.192.101 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1000ms
    rtt min/avg/max/mdev = 80.147/91.986/103.825/11.839 ms
    Connection to 127.0.0.1 closed.
    ljm$ vagrant ssh xenial1 -c 'ping -c2 10.128.93.101'
    PING 10.128.93.101 (10.128.93.101) 56(84) bytes of data.
    From 10.128.65.1 icmp_seq=1 Destination Host Unreachable
    From 10.128.65.1 icmp_seq=2 Destination Host Unreachable
    --- 10.128.93.101 ping statistics ---
    2 packets transmitted, 0 received, +2 errors, 100% packet loss, time 1001ms
    Connection to 127.0.0.1 closed.

On xenial5, every other system can be seen:

    ljm$ vagrant ssh xenial5 -c 'ping -c1 10.128.66.101'
    PING 10.128.66.101 (10.128.66.101) 56(84) bytes of data.
    64 bytes from 10.128.66.101: icmp_req=1 ttl=60 time=81.9 ms
    --- 10.128.66.101 ping statistics ---
    1 packets transmitted, 1 received, 0% packet loss, time 0ms
    rtt min/avg/max/mdev = 81.989/81.989/81.989/0.000 ms
    Connection to 127.0.0.1 closed.
    ljm$ vagrant ssh xenial5 -c 'ping -c1 10.128.94.101'
    PING 10.128.94.101 (10.128.94.101) 56(84) bytes of data.
    64 bytes from 10.128.94.101: icmp_req=1 ttl=60 time=84.8 ms
    --- 10.128.94.101 ping statistics ---
    1 packets transmitted, 1 received, 0% packet loss, time 0ms
    rtt min/avg/max/mdev = 84.820/84.820/84.820/0.000 ms
    Connection to 127.0.0.1 closed.

On xenial1:

    ljm$ vagrant ssh xenial1 -c 'wget -O- 10.128.192.101'
    --2018-06-06 11:32:38-- http://10.128.192.101/
    Connecting to 10.128.192.101:80... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: 177 text/html
    Saving to: `STDOUT'
    <p>This is the default web page for this server.</p>
    <p>The web server software is running but no content has been added, yet.</p>
    </body></html>
    2018-06-06 11:32:38 (9.63 MB/s) - written to stdout 177/177
    Connection to 127.0.0.1 closed.

This means that it all works according to plan.

3.4. Trouble in paradise

We want our networks separated. Look then at this:

    ljm$ vagrant ssh xenial1
    Welcome to Ubuntu 12.04 LTS (GNU/Linux 3.2.0-23-generic x86_64)
     * Documentation: https://help.ubuntu.com/
    New release '14.04.5 LTS' available.
    Run 'do-release-upgrade' to upgrade to it.
    Welcome to your Vagrant-built virtual machine.
    Last login: Wed Jun 6 11:32:38 2018 from 10.0.2.2
    vagrant@xenial64:~$ ssh 10.128.192.101
    The authenticity of host '10.128.192.101 (10.128.192.101)' can't be established.
    ECDSA key fingerprint is 11:5d:55:29:8a:77:d8:08:b4:00:9b:a3:61:93:fe:e5.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added '10.128.192.101' (ECDSA) to the list of known hosts.
    vagrant@10.128.192.101's password:
    Welcome to Ubuntu 12.04 LTS (GNU/Linux 3.2.0-23-generic x86_64)
     * Documentation: https://help.ubuntu.com/
    New release '14.04.5 LTS' available.
    Run 'do-release-upgrade' to upgrade to it.
    Welcome to your Vagrant-built virtual machine.
    Last login: Wed Jun 6 11:29:42 2018 from 10.0.2.2
    vagrant@xenial64:~$ ssh 10.128.93.101
    The authenticity of host '10.128.93.101 (10.128.93.101)' can't be established.
    ECDSA key fingerprint is 11:5d:55:29:8a:77:d8:08:b4:00:9b:a3:61:93:fe:e5.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added '10.128.93.101' (ECDSA) to the list of known hosts.
    vagrant@10.128.93.101's password:
    Welcome to Ubuntu 12.04 LTS (GNU/Linux 3.2.0-23-generic x86_64)
     * Documentation: https://help.ubuntu.com/
    New release '14.04.5 LTS' available.
    Run 'do-release-upgrade' to upgrade to it.
    Welcome to your Vagrant-built virtual machine.
    Last login: Fri Sep 14 06:23:18 2012 from 10.0.2.2
    vagrant@xenial64:~$

I have now abused our common service to hop from customer A to customer B. Of course, in real life, you would never allow logging in to the common service from any of the customer networks. But many allow logging in from the Internet and consider this safe enough. In that case, not only your own network might be compromised, but also those of all the customers.
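The difference between routing separation and login hops can be made explicit with a small graph search in Python. The sketch below is my own illustration: the `DIRECT` table encodes the reachability described in this lab (customer A hosts, customer B hosts and the common service host xenial5), and `login_path` with its `allow_login` parameter is a made-up helper that searches for a chain of logins.

```python
from collections import deque

# Direct IP reachability between the hosts, as described in this lab.
DIRECT = {
    "xenial1": {"xenial2", "xenial5"},
    "xenial2": {"xenial1", "xenial5"},
    "xenial3": {"xenial4", "xenial5"},
    "xenial4": {"xenial3", "xenial5"},
    "xenial5": {"xenial1", "xenial2", "xenial3", "xenial4"},
}


def login_path(start, target, direct=DIRECT, allow_login=lambda host: True):
    """Breadth-first search for a chain of logins from start to target."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for nxt in sorted(direct[path[-1]]):
            # A host is only usable if we can reach it and log in to it.
            if nxt not in seen and allow_login(nxt):
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


# Logins allowed everywhere: the common service becomes a stepping stone.
print(login_path("xenial1", "xenial3"))
# No logins on the common service host: the hop is gone.
print(login_path("xenial1", "xenial3", allow_login=lambda h: h != "xenial5"))
```

The route targets keep xenial3 out of the routing table of customer A, but the search still finds a path as long as a login on xenial5 is possible.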

A Router configs

A1. CE1A.cfg

    hostname CE1A
    ip cef
    no ip domain lookup
    !
    interface Loopback0
     ip address 10.128.64.1 255.255.255.255
     ip ospf network point-to-point
     ip ospf 1 area 0
    interface FastEthernet0/0
     ip address 10.128.10.2 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
    interface FastEthernet1/0
     ip address 10.128.65.1 255.255.255.0
     ip ospf network point-to-point
     ip ospf 1 area 0
     duplex auto
     speed auto
    router ospf 1
     router-id 10.128.64.1
     log-adjacency-changes
    end

A2. CE1B.cfg

    hostname CE1B
    ip cef
    no ip domain lookup
    interface Loopback0
     ip address 10.128.92.1 255.255.255.255
     ip ospf network point-to-point
     ip ospf 1 area 0
    interface FastEthernet0/0
     ip address 10.128.20.2 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
    interface FastEthernet1/0
     ip address 10.128.93.1 255.255.255.0
     ip ospf network point-to-point
     ip ospf 1 area 0
     duplex auto
     speed auto
    router ospf 1
     router-id 10.128.92.1
     log-adjacency-changes
    no ip http server
    no ip http secure-server
    ip forward-protocol nd
    end

A3. CE2A.cfg

    hostname CE2A
    ip cef
    no ip domain lookup
    !
    interface Loopback0
     ip address 10.128.64.2 255.255.255.255
     ip ospf network point-to-point
     ip ospf 1 area 0
    interface FastEthernet0/0
     ip address 10.128.30.2 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
    interface FastEthernet1/0
     ip address 10.128.66.1 255.255.255.0
     ip ospf network point-to-point
     ip ospf 1 area 0
     duplex auto
     speed auto
    router ospf 1
     router-id 10.128.64.2
     log-adjacency-changes
    no ip http server
    no ip http secure-server
    ip forward-protocol nd
    end

A4. CE2B.cfg

    hostname CE2B
    ip cef
    no ip domain lookup
    !
    interface Loopback0
     ip address 10.128.92.2 255.255.255.255
     ip ospf network point-to-point
     ip ospf 1 area 0
    interface FastEthernet0/0
     ip address 10.128.40.2 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
    interface FastEthernet1/0
     ip address 10.128.94.1 255.255.255.0
     ip ospf network point-to-point
     ip ospf 1 area 0
     duplex auto
     speed auto
    router ospf 1
     router-id 10.128.92.2
     log-adjacency-changes
    no ip http server
    no ip http secure-server
    ip forward-protocol nd
    control-plane
    end

A5. P1.cfg

    hostname P1
    ip cef
    no ip domain lookup
    !
    interface Loopback0
     ip address 10.128.128.1 255.255.255.255
     ip ospf network point-to-point
     ip ospf 1 area 0
    interface FastEthernet0/0
     ip address 10.128.0.1 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
     mpls ip
    interface FastEthernet0/1
     ip address 10.128.3.1 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
     mpls ip
    interface FastEthernet1/0
     ip address 10.128.1.1 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
     mpls ip
    router ospf 1
     router-id 10.128.128.1
     log-adjacency-changes
    no ip http server
    no ip http secure-server
    ip forward-protocol nd
    end

A6. P2.cfg

    hostname P2
    ip cef
    no ip domain lookup
    !
    interface Loopback0
     ip address 10.128.128.2 255.255.255.255
     ip ospf network point-to-point
     ip ospf 1 area 0
    interface FastEthernet0/0
     ip address 10.128.0.2 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
     mpls ip
    interface FastEthernet0/1
     ip address 10.128.4.1 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
     mpls ip
    interface FastEthernet1/0
     ip address 10.128.2.1 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
     mpls ip
    router ospf 1
     router-id 10.128.128.2
     log-adjacency-changes
    no ip http server
    no ip http secure-server
    ip forward-protocol nd
    end

A7. PE1.cfg

    hostname PE1
    ip cef
    no ip domain lookup
    ip vrf Customer_A
     rd 65000:1
     route-target export 65000:1
     route-target import 65000:1
     route-target import 65000:99
    ip vrf Customer_B
     rd 65000:2
     route-target export 65000:2
     route-target import 65000:2
     route-target import 65000:99
    !
    interface Loopback0
     ip address 10.128.128.3 255.255.255.255
     ip ospf network point-to-point
     ip ospf 1 area 0
    interface FastEthernet0/0
     ip address 10.128.1.2 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
     mpls ip
    interface FastEthernet1/0
     ip vrf forwarding Customer_A
     ip address 10.128.10.1 255.255.255.0
     ip ospf 2 area 0
     duplex auto
     speed auto
    interface FastEthernet2/0
     ip vrf forwarding Customer_B
     ip address 10.128.20.1 255.255.255.0
     ip ospf 3 area 0
     duplex auto
     speed auto
    router ospf 2 vrf Customer_A
     router-id 10.128.10.1
     log-adjacency-changes
     redistribute bgp 65000 subnets
    router ospf 3 vrf Customer_B
     router-id 10.128.20.1
     log-adjacency-changes
     redistribute bgp 65000 subnets
    router ospf 1
     router-id 10.128.128.3
     log-adjacency-changes
    router bgp 65000
     no synchronization
     bgp log-neighbor-changes
     neighbor 10.128.128.4 remote-as 65000
     neighbor 10.128.128.4 update-source Loopback0
     neighbor 10.128.128.5 remote-as 65000
     neighbor 10.128.128.5 update-source Loopback0
     no auto-summary
     !
     address-family vpnv4
      neighbor 10.128.128.4 activate
      neighbor 10.128.128.4 send-community extended
      neighbor 10.128.128.5 activate
      neighbor 10.128.128.5 send-community extended
     exit-address-family
     !
     address-family ipv4 vrf Customer_B
      redistribute ospf 3 vrf Customer_B
      no synchronization
     exit-address-family
     !
     address-family ipv4 vrf Customer_A
      redistribute ospf 2 vrf Customer_A
      no synchronization
     exit-address-family
    ip forward-protocol nd
    end

A8. PE2.cfg

    hostname PE2
    ip cef
    no ip domain lookup
    ip vrf Customer_A
     rd 65000:1
     route-target export 65000:1
     route-target import 65000:1
     route-target import 65000:99
    ip vrf Customer_B
     rd 65000:2
     route-target export 65000:2
     route-target import 65000:2
     route-target import 65000:99
    !
    interface Loopback0
     ip address 10.128.128.4 255.255.255.255
     ip ospf network point-to-point
     ip ospf 1 area 0
    interface FastEthernet0/0
     ip address 10.128.2.2 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
     mpls ip
    interface FastEthernet1/0
     ip vrf forwarding Customer_A
     ip address 10.128.30.1 255.255.255.0
     ip ospf 2 area 0
     duplex auto
     speed auto
    interface FastEthernet2/0
     ip vrf forwarding Customer_B
     ip address 10.128.40.1 255.255.255.0
     ip ospf 3 area 0
     duplex auto
     speed auto
    router ospf 2 vrf Customer_A
     router-id 10.128.30.1
     log-adjacency-changes
     redistribute bgp 65000 subnets
    router ospf 3 vrf Customer_B
     router-id 10.128.40.1
     log-adjacency-changes
     redistribute bgp 65000 subnets
    router ospf 1
     log-adjacency-changes
    router bgp 65000
     no synchronization
     bgp log-neighbor-changes
     neighbor 10.128.128.3 remote-as 65000
     neighbor 10.128.128.3 update-source Loopback0
     neighbor 10.128.128.5 remote-as 65000
     neighbor 10.128.128.5 update-source Loopback0
     no auto-summary
     !
     address-family vpnv4
      neighbor 10.128.128.3 activate
      neighbor 10.128.128.3 send-community extended
      neighbor 10.128.128.5 activate
      neighbor 10.128.128.5 send-community extended
     exit-address-family
     !
     address-family ipv4 vrf Customer_B
      redistribute ospf 3 vrf Customer_B
      no synchronization
     exit-address-family
     !
     address-family ipv4 vrf Customer_A
      redistribute ospf 2 vrf Customer_A
      no synchronization
     exit-address-family
    ip forward-protocol nd
    end

A9. PE3.cfg

    hostname PE3
    ip cef
    no ip domain lookup
    ip vrf services
     rd 65000:99
     route-target both 65000:99
     route-target import 65000:1
     route-target import 65000:2
    !
    interface Loopback0
     ip address 10.128.128.5 255.255.255.255
     ip ospf network point-to-point
     ip ospf 1 area 0
    interface FastEthernet0/0
     ip address 10.128.3.2 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
     mpls ip
    interface FastEthernet0/1
     ip address 10.128.4.2 255.255.255.0
     ip ospf 1 area 0
     duplex auto
     speed auto
     mpls ip
    interface FastEthernet1/0
     ip vrf forwarding services
     ip address 10.128.192.1 255.255.255.0
     duplex auto
     speed auto
    router ospf 1
     log-adjacency-changes
    router bgp 65000
     no synchronization
     bgp log-neighbor-changes
     neighbor 10.128.128.3 remote-as 65000
     neighbor 10.128.128.3 update-source Loopback0
     neighbor 10.128.128.4 remote-as 65000
     neighbor 10.128.128.4 update-source Loopback0
     no auto-summary
     !
     address-family vpnv4
      neighbor 10.128.128.3 activate
      neighbor 10.128.128.3 send-community extended
      neighbor 10.128.128.4 activate
      neighbor 10.128.128.4 send-community extended
     exit-address-family
     !
     address-family ipv4 vrf services
      redistribute connected
     exit-address-family
    !
    ip forward-protocol nd
    end

B Virtual machines

B1. Vagrantfile

The Vagrantfile is the one listed in section 2.1.

B1.1. setup.xenial1.sh

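    #!/bin/bash
    # Find the new name of the second NIC (the kernel renames eth1 at boot).
    ETH1=$(dmesg | grep -i 'renamed from eth1' | sed -n 's/: renamed from eth1//;s/.* //p')
    # Address the lab interface and route the lab range via the CE router.
    ifconfig "$ETH1" 10.128.65.101 netmask 255.255.255.0 up
    route add -net 10.128.0.0 netmask 255.128.0.0 gw 10.128.65.1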

B1.2. setup.xenial2.sh

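    #!/bin/bash
    # Find the new name of the second NIC (the kernel renames eth1 at boot).
    ETH1=$(dmesg | grep -i 'renamed from eth1' | sed -n 's/: renamed from eth1//;s/.* //p')
    # Address the lab interface and route the lab range via the CE router.
    ifconfig "$ETH1" 10.128.66.101 netmask 255.255.255.0 up
    route add -net 10.128.0.0 netmask 255.128.0.0 gw 10.128.66.1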

B1.3. setup.xenial3.sh

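    #!/bin/bash
    # Find the new name of the second NIC (the kernel renames eth1 at boot).
    ETH1=$(dmesg | grep -i 'renamed from eth1' | sed -n 's/: renamed from eth1//;s/.* //p')
    # Address the lab interface and route the lab range via the CE router.
    ifconfig "$ETH1" 10.128.93.101 netmask 255.255.255.0 up
    route add -net 10.128.0.0 netmask 255.128.0.0 gw 10.128.93.1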

B1.4. setup.xenial4.sh

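    #!/bin/bash
    # Find the new name of the second NIC (the kernel renames eth1 at boot).
    ETH1=$(dmesg | grep -i 'renamed from eth1' | sed -n 's/: renamed from eth1//;s/.* //p')
    # Address the lab interface and route the lab range via the CE router.
    ifconfig "$ETH1" 10.128.94.101 netmask 255.255.255.0 up
    route add -net 10.128.0.0 netmask 255.128.0.0 gw 10.128.94.1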

B1.5. setup.xenial5.sh

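    #!/bin/bash
    # Find the new name of the second NIC (the kernel renames eth1 at boot).
    ETH1=$(dmesg | grep -i 'renamed from eth1' | sed -n 's/: renamed from eth1//;s/.* //p')
    # Address the lab interface and route the lab range via the PE router.
    ifconfig "$ETH1" 10.128.192.101 netmask 255.255.255.0 up
    route add -net 10.128.0.0 netmask 255.128.0.0 gw 10.128.192.1
    # Install the web server that acts as the common service.
    apt-get -y update
    apt-get -y install apache2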
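All setup scripts add the same static route. A quick Python check with the standard `ipaddress` module shows what that route covers. The host addresses are the ones from the setup scripts; `STATIC_ROUTE`, `HOSTS` and `uncovered` are names chosen for this sketch, and 10.0.2.2 is the address the Vagrant logins come from in the session output shown earlier.

```python
import ipaddress

# Destination and netmask from the `route add -net` line in the setup scripts.
STATIC_ROUTE = ipaddress.ip_network("10.128.0.0/255.128.0.0")

# Lab addresses of the virtual machines, from the setup scripts.
HOSTS = {
    "xenial1": "10.128.65.101",
    "xenial2": "10.128.66.101",
    "xenial3": "10.128.93.101",
    "xenial4": "10.128.94.101",
    "xenial5": "10.128.192.101",
}


def uncovered(hosts, route=STATIC_ROUTE):
    """Return the hosts whose address is not matched by the static route."""
    return sorted(name for name, addr in hosts.items()
                  if ipaddress.ip_address(addr) not in route)


print("route:", STATIC_ROUTE)
print("hosts outside the route:", uncovered(HOSTS))
print("10.0.2.2 inside the route:",
      ipaddress.ip_address("10.0.2.2") in STATIC_ROUTE)
```

The netmask 255.128.0.0 corresponds to a /9, so the route matches all lab addresses but leaves the 10.0.2.x management network of the virtual machines alone.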


C Versions

| version | date | comment |
|---|---|---|
| 1 | 2016-02 | Packetlife replay and adaptation |
| 2 | 2018-06 | Changed hosts to vagrant machines. |
| 3 | 2020-02 | Changed precise to xenial |
